🚶‍♀️ Counting Steps, the Smart Way

Zero-training gait analytics that nail the count – within ±1 step 90 % of the time!

TL;DR

- 3D pose → signed toe speed → adaptive threshold sweep → step count.

- One global threshold = no per-user calibration.

- 💯 Tested against annotated ground truth: 90 % of sessions land within ±1 step.

- Scroll for graphs, code, and demos.

Why bother?

To keep our gait analysis accurate and efficient, we first verify that each video contains at least a minimum amount of valid gait movement. Samples that fall short of this minimum aren’t suitable for our current pipeline, so we skip them up front. That way, we avoid wasting compute on out-of-scope cases, prevent misleading classifications, and let the model do what it does best on the inputs it was designed for.

Our goal this sprint: a zero-training step counter that:

- works out of the box on any subject,

- survives speed swings, from a rehab shuffle to brisk walking, and

- applies an opinionated set of rules for deciding what counts as a step and what doesn’t.

From Pose to Foot Speed

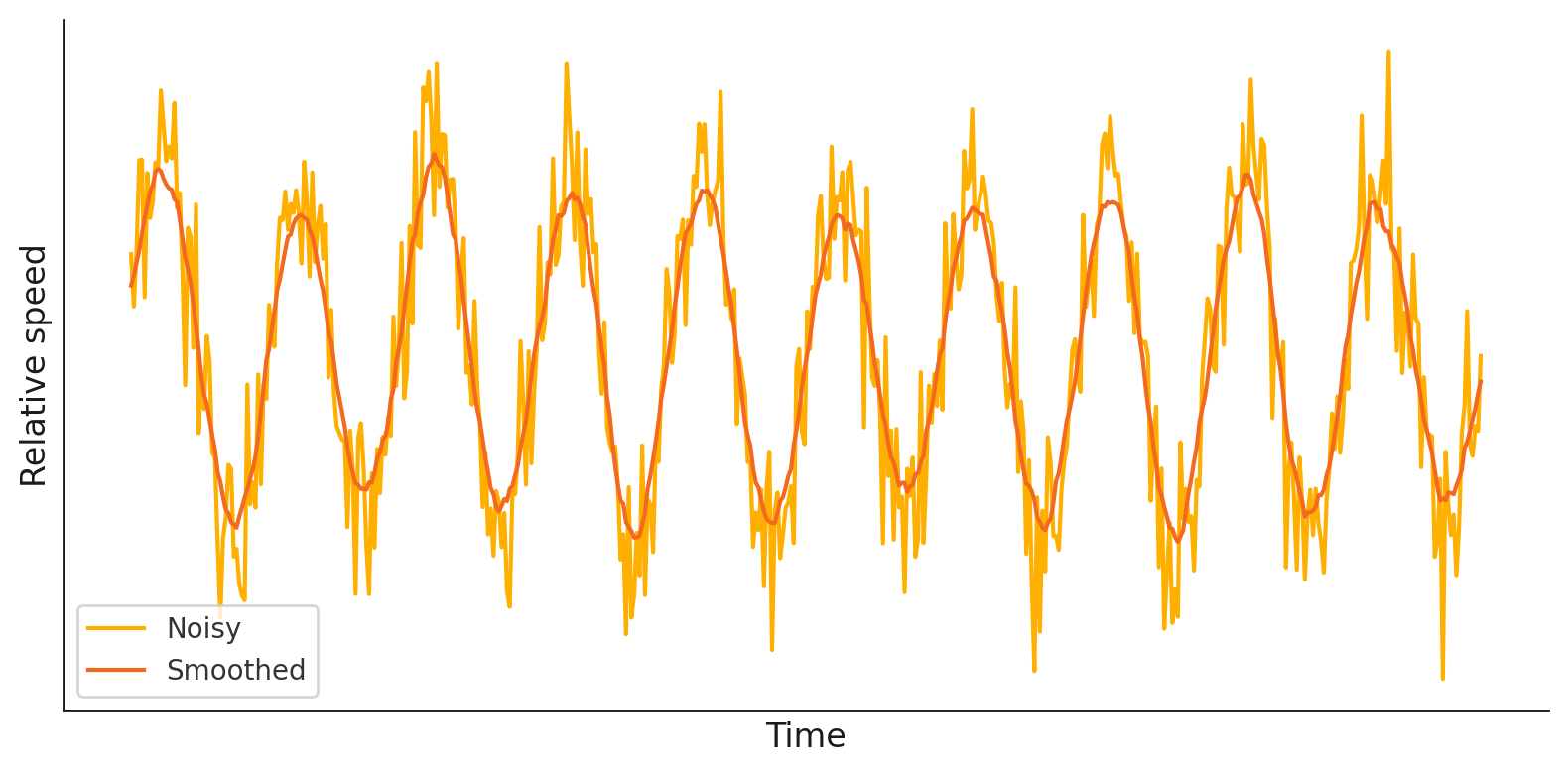

We begin by extracting each toe’s 3D trajectory from our pelvis-centered pose data and applying a lightweight polynomial smoothing filter chosen to preserve the timing of each swing peak. Next, we compute the instantaneous velocity along the camera’s depth axis, retaining the sign so “forward” and “backward” motion remain distinct even when the subject moves toward or away from the camera. This signed toe-speed signal then feeds directly into our adaptive threshold sweep for reliable step detection.
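A minimal sketch of this stage, assuming each toe’s depth-axis coordinate arrives as a plain list of per-frame values at a known frame rate. The lightweight polynomial smoother is stood in for by a classic 5-point Savitzky-Golay filter (quadratic fit), and velocity comes from central differences. The names `savgol5`, `signed_velocity`, and `toe_speed`, and the 30 fps default, are hypothetical and not taken from our pipeline.

```python
from typing import List, Sequence

# 5-point Savitzky-Golay smoothing weights (quadratic fit), normalised by 35.
# Assumption: stands in for the article's "lightweight polynomial smoothing filter".
SG5_WEIGHTS = (-3.0, 12.0, 17.0, 12.0, -3.0)
SG5_NORM = 35.0


def savgol5(x: Sequence[float]) -> List[float]:
    """Smooth a 1-D signal; the two samples at each edge are copied unchanged."""
    out = list(x)
    for i in range(2, len(x) - 2):
        window = x[i - 2:i + 3]
        out[i] = sum(w * v for w, v in zip(SG5_WEIGHTS, window)) / SG5_NORM
    return out


def signed_velocity(z: Sequence[float], fps: float) -> List[float]:
    """Signed velocity in units/s: central differences inside, one-sided at the ends.

    The sign is kept, so motion toward and away from the camera stays distinct.
    """
    n = len(z)
    if n < 2:
        return [0.0] * n
    v = [0.0] * n
    v[0] = (z[1] - z[0]) * fps
    v[-1] = (z[-1] - z[-2]) * fps
    for i in range(1, n - 1):
        v[i] = (z[i + 1] - z[i - 1]) * fps / 2.0
    return v


def toe_speed(toe_depth: Sequence[float], fps: float = 30.0) -> List[float]:
    """Hypothetical helper: smooth the toe's depth trajectory, then differentiate."""
    return signed_velocity(savgol5(toe_depth), fps)
```

A Savitzky-Golay window leaves straight-line motion untouched, so a toe whose depth grows by 0.1 units per frame comes out as a constant 3.0 units/s at 30 fps, and the mirrored motion comes out as -3.0.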

Adaptive Threshold Sweep 🎛️

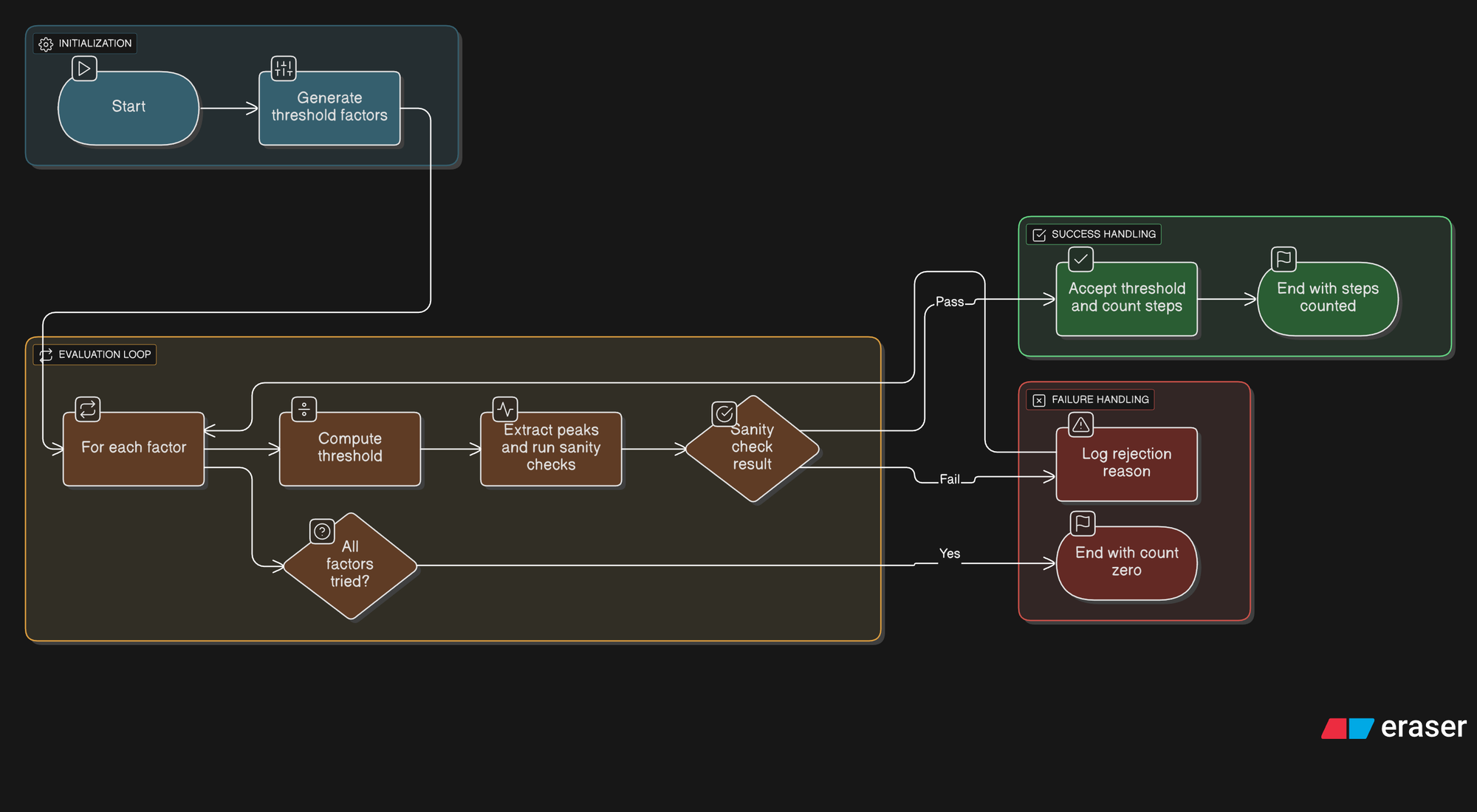

Instead of hard-coding a single cutoff, we perform a coarse-to-fine search over one global threshold factor. At each candidate level we:

- Extract peaks from the signed toe-speed trace

- Check that the result meets our step-sanity criteria (minimum step count, strict left-right alternation, no stride overlaps, and a realistic cadence)

- Log each trial’s outcome so we can explain any rejections
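The sanity criteria in the list above can be sketched as one predicate over the merged left and right peaks. This is a sketch under stated assumptions: “no stride overlaps” is read here as a minimum time gap between consecutive footfalls, and the default step minimum, gap, and cadence bounds are hypothetical placeholders, not the values our pipeline uses. Returning a reason string alongside the verdict is what makes per-trial logging straightforward.

```python
from typing import List, Sequence, Tuple

Step = Tuple[int, str]  # (frame index, "L" or "R")


def merge_steps(left: Sequence[int], right: Sequence[int]) -> List[Step]:
    """Combine left- and right-toe peak frames into one time-ordered list."""
    return sorted([(f, "L") for f in left] + [(f, "R") for f in right])


def passes_sanity(
    steps: Sequence[Step],
    fps: float,
    min_steps: int = 4,                                   # hypothetical default
    min_gap_s: float = 0.25,                              # hypothetical "no overlap" gap
    cadence_range: Tuple[float, float] = (30.0, 200.0),  # hypothetical, steps/min
) -> Tuple[bool, str]:
    """Return (verdict, reason) so every rejection can be logged and explained."""
    if len(steps) < min_steps:
        return False, f"too few steps ({len(steps)} < {min_steps})"
    for (f0, foot0), (f1, foot1) in zip(steps, steps[1:]):
        if foot0 == foot1:
            return False, f"alternation broken at frame {f1}"
        if (f1 - f0) / fps < min_gap_s:
            return False, f"steps overlap at frame {f1}"
    duration_s = (steps[-1][0] - steps[0][0]) / fps
    if duration_s <= 0:
        return False, "zero-length walking span"
    cadence = 60.0 * (len(steps) - 1) / duration_s
    lo, hi = cadence_range
    if not lo <= cadence <= hi:
        return False, f"unrealistic cadence ({cadence:.0f} steps/min)"
    return True, "ok"
```

For example, five alternating footfalls 15 frames apart at 30 fps give a cadence of 120 steps/min and pass, while two consecutive left-foot peaks are rejected with an “alternation broken” reason.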

Once a candidate passes all checks, we lock in that threshold and count the steps. This dynamic sweep adapts automatically to different walking speeds and noise levels, so no per-user tuning is required.
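The coarse-to-fine sweep can be sketched as follows. This is a minimal sketch, not our production code: the threshold is assumed to be a factor times the largest forward toe speed of either foot, `local_peaks` is a plain local-maximum detector, and `alternates` is a simplified stand-in for the full sanity criteria (any predicate returning a verdict and a reason can be passed as `check`). Factors run from strict to lenient, and the first coarse pass is refined on a finer grid just above it, so the strictest passing factor wins; that ordering is also an assumption.

```python
from typing import Callable, List, Sequence, Tuple

Check = Callable[[List[int], List[int]], Tuple[bool, str]]


def local_peaks(v: Sequence[float], thr: float) -> List[int]:
    """Frames that are local maxima of v and rise strictly above thr."""
    return [i for i in range(1, len(v) - 1)
            if v[i] > thr and v[i] >= v[i - 1] and v[i] > v[i + 1]]


def alternates(left: List[int], right: List[int], min_steps: int = 4) -> Tuple[bool, str]:
    """Simplified stand-in check: enough steps and strict L/R alternation."""
    feet = [foot for _, foot in sorted([(f, "L") for f in left] + [(f, "R") for f in right])]
    if len(feet) < min_steps:
        return False, "too few steps"
    if any(a == b for a, b in zip(feet, feet[1:])):
        return False, "alternation broken"
    return True, "ok"


def sweep_threshold(v_left: Sequence[float], v_right: Sequence[float],
                    check: Check = alternates, coarse: float = 0.1, fine: float = 0.02):
    """Coarse-to-fine search for one global threshold factor.

    Assumption: threshold = factor * (largest forward toe speed of either foot).
    Returns (factor or None, left_peaks, right_peaks, log).
    """
    log: List[Tuple[float, bool, str]] = []
    scale = max(max(v_left), max(v_right))
    if scale <= 0:
        return None, [], [], log

    def trial(f: float):
        left, right = local_peaks(v_left, f * scale), local_peaks(v_right, f * scale)
        ok, reason = check(left, right)
        log.append((f, ok, reason))  # every outcome is kept for later inspection
        return ok, left, right

    for k in range(int(round(1 / coarse)) - 1, 0, -1):  # 0.9, 0.8, ..., 0.1
        f = round(k * coarse, 4)
        ok, left, right = trial(f)
        if not ok:
            continue
        for j in range(int(round(coarse / fine)) - 1, 0, -1):  # refine inside (f, f + coarse)
            g = round(f + j * fine, 4)
            ok_g, left_g, right_g = trial(g)
            if ok_g:
                return g, left_g, right_g, log
        return f, left, right, log
    return None, [], [], log  # nothing passed: the caller must fall back


# Illustrative synthetic traces (hypothetical values) with a 0.3 noise bump on the left toe.
demo_left = [{10: 1.0, 20: 0.3, 40: 0.6, 70: 0.8}.get(i, 0.0) for i in range(100)]
demo_right = [{25: 0.7, 55: 0.9, 85: 0.65}.get(i, 0.0) for i in range(100)]
factor, left, right, log = sweep_threshold(demo_left, demo_right)
```

On these synthetic traces, factors 0.9 through 0.6 either miss swings or break left-right alternation, 0.5 is the first coarse pass, and the fine pass settles on 0.58, which still excludes the noise bump at frame 20. The `log` list holds all six trials with their reasons.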

Show Me the Graphs 📊

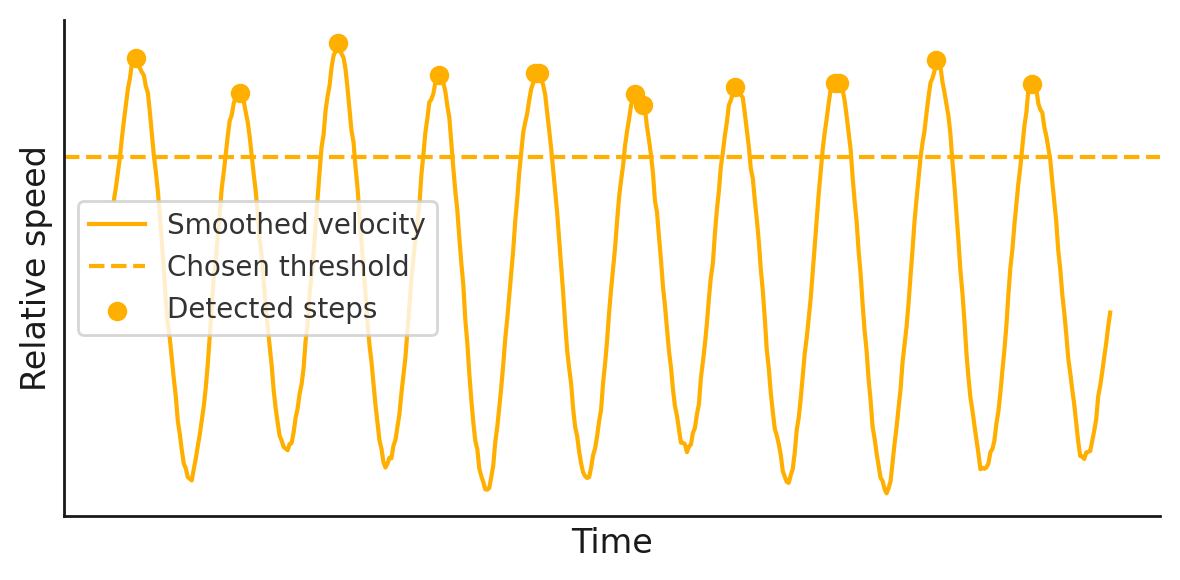

Let’s dive into the key visualizations that show how our adaptive sweep picks out true footfalls, cleanly separating swing from stance and noise from nuance.

This plot lays out the gait cycle at a glance:

- Swing phase → the sharp rising peaks

- Stance phase → the valleys between peaks

- Threshold (dashed line) → the dynamically chosen cut-off
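The three plot elements map onto a simple labelling rule. A minimal sketch, assuming a frame counts as swing whenever its signed toe speed is above the chosen threshold and as stance otherwise; `phase_runs` and its run format are hypothetical, and the actual plot may shade phases differently.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def phase_runs(v: Sequence[float], thr: float) -> List[Tuple[str, int, int]]:
    """Split a signed toe-speed trace into (phase, first_frame, last_frame) runs.

    Assumption: swing = speed above thr (the peaks), stance = everything else
    (the valleys). Frame ranges are inclusive.
    """
    runs: List[Tuple[str, int, int]] = []
    start = 0
    for is_swing, group in groupby(v, key=lambda s: s > thr):
        length = sum(1 for _ in group)
        runs.append(("swing" if is_swing else "stance", start, start + length - 1))
        start += length
    return runs
```

For a short trace such as `[0.0, 0.1, 0.9, 1.2, 0.8, 0.1, 0.0]` with a threshold of 0.5, this yields stance on frames 0 to 1, swing on frames 2 to 4, and stance on frames 5 to 6.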

Peeking Under the Hood

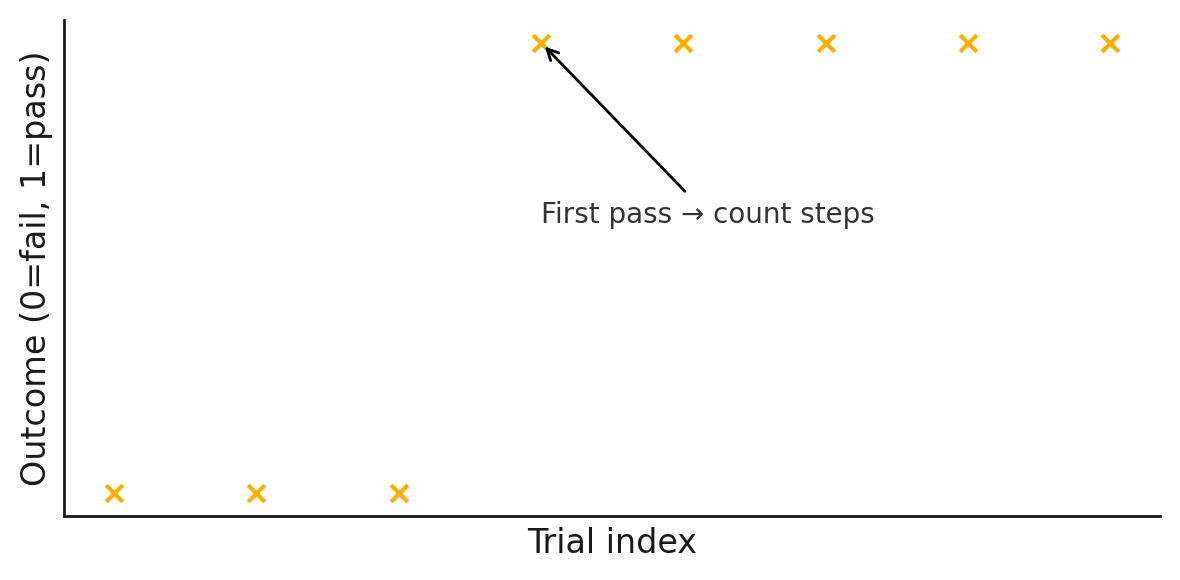

Note on accuracy: even with this near-airtight decision logic, you’ll occasionally see a ±1-step discrepancy compared with manually annotated ground truth.

Numbers That Matter

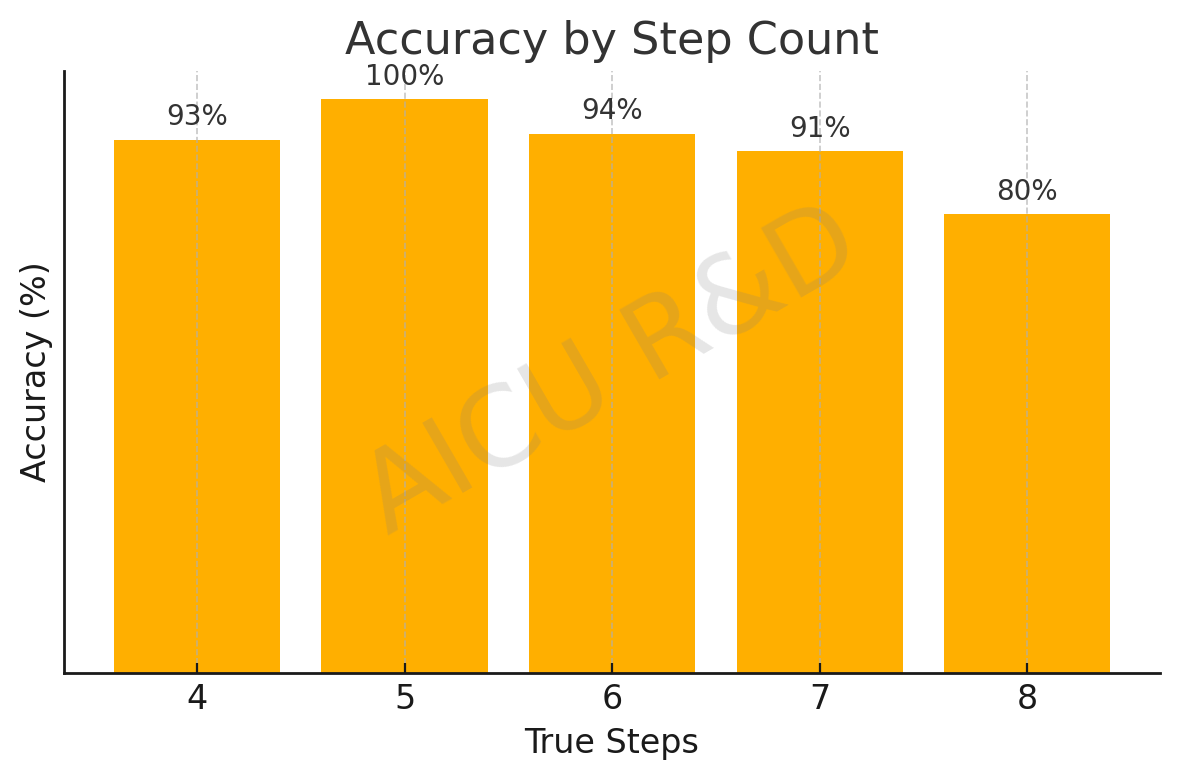

In large-scale validation across diverse settings (indoor corridors, outdoor sidewalks, treadmills, rehab clinics), our zero-training counter achieves:

- ±1-step accuracy on over 90 % of trials

- A typical error of roughly half a step
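Both headline numbers come from per-trial absolute errors between predicted and annotated step counts. A minimal sketch, assuming one predicted and one ground-truth count per trial; the article doesn’t say whether “typical error” means the mean or the median absolute error, so `count_accuracy` (a hypothetical helper) reports both.

```python
from statistics import mean, median
from typing import Dict, Sequence


def count_accuracy(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """Summarise step-count accuracy over paired trials."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("need equal-length, non-empty prediction and truth lists")
    errors = [abs(p - t) for p, t in zip(pred, truth)]
    return {
        "within_1": sum(e <= 1 for e in errors) / len(errors),  # share of trials within ±1 step
        "mean_abs_error": float(mean(errors)),
        "median_abs_error": float(median(errors)),
    }
```

On toy inputs, predictions `[10, 12, 9, 20]` against ground truth `[10, 11, 9, 23]` give a ±1 rate of 0.75, a mean absolute error of 1.0, and a median absolute error of 0.5.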

Conclusion

We set out to build a zero-training, drop-in step counter that works on any 3D pose input: no per-user calibration, no retraining, no external hardware. By combining direction-preserving toe velocities with an adaptive threshold search, we’ve created a pipeline that:

- Filters out unsuitable clips up front to save compute and avoid false counts.

- Smooths each toe trajectory and computes signed velocity along the camera’s depth axis, preserving forward/backward direction.

- Dynamically selects one global threshold via a coarse-to-fine sweep and combined sanity checks, logging every trial for full transparency.

- Falls back gracefully if no threshold passes, still enforcing alternation rules to prevent massive under- or overcounts.
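One way to read the fallback rule, sketched under assumptions: when no threshold passes the sweep, take the peaks from a lenient threshold, merge both feet in time order, and whenever consecutive peaks come from the same foot keep only the strongest one, so the output always alternates. The function name `enforce_alternation` and the “keep the strongest peak” tie-break are hypothetical, not confirmed details of our fallback.

```python
from typing import List, Sequence, Tuple


def enforce_alternation(
    left: Sequence[int], right: Sequence[int],
    v_left: Sequence[float], v_right: Sequence[float],
) -> List[Tuple[int, str]]:
    """Collapse runs of same-foot peaks, keeping the highest toe-speed peak in each run."""
    merged = sorted([(f, "L", v_left[f]) for f in left] +
                    [(f, "R", v_right[f]) for f in right])
    kept: List[Tuple[int, str, float]] = []
    for frame, foot, height in merged:
        if kept and kept[-1][1] == foot:
            if height > kept[-1][2]:
                kept[-1] = (frame, foot, height)  # stronger peak replaces the weaker one
            continue
        kept.append((frame, foot, height))
    return [(frame, foot) for frame, foot, _ in kept]
```

With left peaks at frames 10 and 15 (speeds 0.5 and 0.9) followed by alternating peaks, the weaker frame-10 peak is dropped and the sequence stays strictly L, R, L, R.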

The result is a robust, fully transparent system whose behavior you can inspect in seconds via simple schematics:

- Smoothed velocity chart showing swing vs. stance phases and detected footfalls.

- Pass/fail sweep diagram illustrating how we pick the winning threshold.

- Performance distribution highlighting that most predictions fall within one step of ground truth.

Beyond raw accuracy, this approach empowers you to:

- Diagnose edge cases at a glance, so occlusions, sudden stops, and non-walking clips don’t slip through unnoticed.

- Adapt instantly to new camera angles or environments without changing a line of code.

- Extend the framework for IMU fusion, multi-person counting, or on-device real-time inference, thanks to its modular design.

In short: zero setup, crystal-clear logic, and step counts you can rely on.